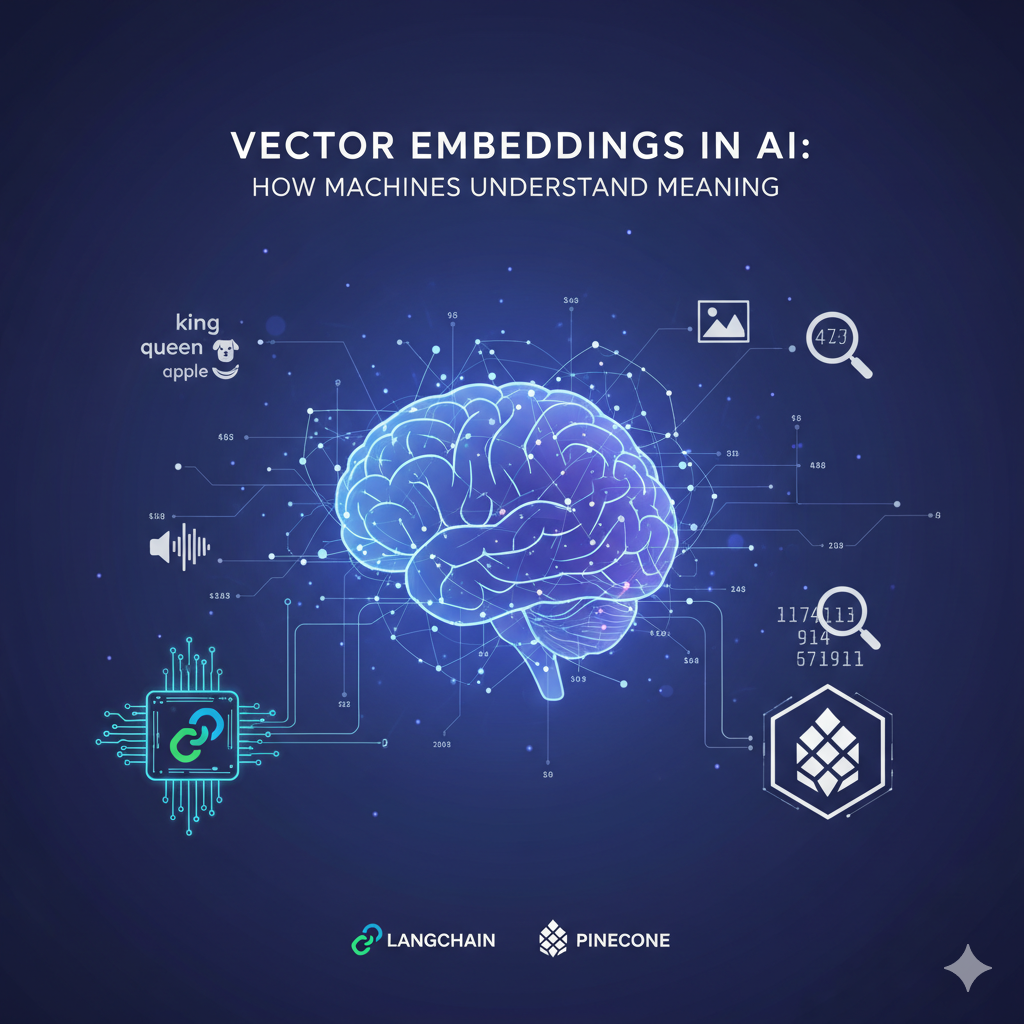

Vector Embeddings in AI: How Machines Understand Meaning

Artificial Intelligence (AI) has made remarkable progress in recent years, from chatbots that can hold human-like conversations to recommendation systems that predict what you might want to watch next.

But have you ever wondered how AI systems actually “understand” meaning?

How do they know that the words “king” and “queen” are related — or that “cat” and “dog” belong to the same category of animals?

The answer lies in one of the most powerful concepts in modern AI and machine learning — vector embeddings.

In this guide, we’ll break down what vector embeddings in AI are, how they work, why they’re important, and how frameworks like LangChain and databases like Pinecone use them to make AI applications smarter.

What Are Vector Embeddings in AI?

Let’s start simple.

A vector embedding is a way of representing data (like words, images, or sentences) as a list of numbers — called a vector.

Instead of storing text as plain words, AI converts it into numerical form, so that mathematical operations can be performed.

For example:

- The word “apple” might be represented as [0.12, -0.45, 0.88, 0.63].

- The word “banana” might be represented as [0.10, -0.43, 0.86, 0.60].

These numbers capture the semantic meaning of the words, so “apple” and “banana” end up with similar embeddings because both are fruits.

This numeric form allows AI models to measure similarity, detect relationships, and understand context between data points.
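To make “measure similarity” concrete, here is a minimal Python sketch that compares the illustrative vectors above using cosine similarity, one common way to compare embeddings. The `car` vector is a made-up stand-in for an unrelated word, and the function name is our own.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

apple = [0.12, -0.45, 0.88, 0.63]
banana = [0.10, -0.43, 0.86, 0.60]
car = [-0.70, 0.55, -0.10, 0.20]  # hypothetical vector for an unrelated word

print(round(cosine_similarity(apple, banana), 4))  # close to 1.0: very similar
print(round(cosine_similarity(apple, car), 4))     # negative: dissimilar
```

Cosine similarity compares the direction of two vectors rather than their length, which is one reason it is a popular default for embeddings.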

So in simple terms, vector embeddings in AI are the language of meaning for machines.

The Intuition Behind Embeddings

To understand embeddings better, imagine a map of ideas.

Every concept — a word, a sentence, or even an image — becomes a point in a high-dimensional space.

- Similar ideas are close together.

- Unrelated ideas are far apart.

This “map” is called the vector space or embedding space.

For instance:

- The vectors for “Paris” and “France” will be close.

- The vector for “Paris” will also be close to “London”, since both are capital cities.
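The “map” idea can be sketched the same way: treat each word as a point and sort the other points by straight-line (Euclidean) distance. The 3-D vectors below are hand-picked for illustration, not output from a real model.

```python
import math

# Hypothetical, hand-picked 3-D vectors; real models learn hundreds of dimensions.
embeddings = {
    "paris": [0.9, 0.8, 0.1],
    "france": [0.7, 0.9, 0.0],
    "london": [0.9, 0.5, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

def nearest_neighbors(word, space):
    """Return the other words ordered from closest to farthest (Euclidean distance)."""
    target = space[word]
    others = [w for w in space if w != word]
    return sorted(others, key=lambda w: math.dist(target, space[w]))

print(nearest_neighbors("paris", embeddings))  # ['france', 'london', 'banana']
```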

This mapping of meaning is what enables AI systems like ChatGPT or Google Search to deliver contextually relevant results rather than just keyword matches.

How Are Vector Embeddings Created?

Vector embeddings are usually created using deep learning models.

Here’s a simple overview of how it works:

- Training a Neural Network – AI models are trained on huge datasets of text, images, or audio.

- Learning Relationships – During training, the model learns to position similar items closer in vector space.

- Generating Embeddings – Once trained, the model can convert new data into vectors that capture semantic meaning.
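Real embedding models learn these positions by training a neural network, which does not fit in a few lines. As a simplified, count-based stand-in (not a neural network), the sketch below represents each word by counting the words it appears alongside in a tiny made-up corpus. Because “cat” and “dog” share contexts such as “drinks” and “chases”, their vectors point in similar directions, while “bank” shares none.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus; each sentence counts as one context window.
corpus = [
    "cat drinks milk",
    "dog drinks water",
    "cat chases mouse",
    "dog chases ball",
    "bank holds money",
    "bank lends money",
]

def cooccurrence_vectors(sentences):
    """For each word, count how often every other word appears in the same sentence."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            for j, other in enumerate(words):
                if i != j:
                    vectors[word][other] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["cat"], vectors["dog"]), 2))   # 0.5
print(round(cosine(vectors["cat"], vectors["bank"]), 2))  # 0.0
```

Models like Word2Vec and GloVe roughly go further by compressing this kind of context signal into short, dense vectors, but the underlying idea that shared contexts suggest similar meaning is the same.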

Popular Embedding Models:

- Word2Vec (by Google): An early, widely adopted model for learning word relationships from text.

- GloVe (by Stanford): Learns embeddings from word co-occurrence statistics.

- BERT (by Google): Creates contextual embeddings, where a word’s vector depends on the sentence around it.

- OpenAI Embeddings: Embedding models (such as text-embedding-3-small) offered through OpenAI’s API for modern AI applications.

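These models produce embeddings that can be hundreds or even thousands of dimensions long. The dimensions are learned during training rather than hand-designed, and a single dimension rarely corresponds to one human-readable feature; meaning is spread across many dimensions at once.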

Why Vector Embeddings Matter in AI

Without embeddings, AI systems would treat every word or concept as unrelated — losing all sense of meaning.
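One way to see this is with one-hot encoding, a common non-embedding baseline where each word simply gets its own slot in a vector. In the minimal sketch below (the three-word vocabulary is hypothetical), every pair of different words has a cosine similarity of zero, so “cat” is exactly as unrelated to “dog” as it is to “car”.

```python
import math

vocabulary = ["cat", "dog", "car"]  # hypothetical three-word vocabulary

def one_hot(word):
    """A 1.0 in the word's own position and 0.0 everywhere else."""
    return [1.0 if w == word else 0.0 for w in vocabulary]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

print(one_hot("cat"))                                     # [1.0, 0.0, 0.0]
print(cosine_similarity(one_hot("cat"), one_hot("dog")))  # 0.0
print(cosine_similarity(one_hot("cat"), one_hot("car")))  # 0.0
```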

Vector embeddings allow machines to:

- Understand context – Know that “bank” in “river bank” differs from “bank” in “money bank.”

- Find semantic similarity – Retrieve related content, even if different words are used.

- Power recommendations – Suggest similar products, songs, or articles.

- Enable AI memory – Help chatbots remember and relate past conversations.
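The “bank” example is where a fixed, per-word (static) embedding differs from a contextual one. The sketch below uses hypothetical 2-D vectors. A static lookup returns the same vector for “bank” in both phrases, while a toy contextual version blends in the neighboring words, so the two senses get different vectors. The blending rule is our own simplification; models such as BERT use self-attention over the whole sentence instead.

```python
# Hypothetical 2-D static vectors: one fixed vector per word, as in Word2Vec or GloVe.
static = {
    "bank": [0.5, 0.5],
    "river": [0.0, 1.0],
    "money": [1.0, 0.0],
}

def static_embedding(word, sentence):
    """Static lookup: the surrounding sentence is ignored."""
    return static[word]

def contextual_embedding(word, sentence):
    """Toy contextual vector: half the word's own vector, half the average of its neighbors.

    A deliberately crude stand-in; models such as BERT use self-attention instead.
    """
    neighbors = [static[w] for w in sentence.split() if w != word]
    if not neighbors:
        return static[word]
    context = [sum(dim) / len(neighbors) for dim in zip(*neighbors)]
    return [0.5 * own + 0.5 * ctx for own, ctx in zip(static[word], context)]

print(static_embedding("bank", "river bank") == static_embedding("bank", "money bank"))  # True
print(contextual_embedding("bank", "river bank"))  # [0.25, 0.75]
print(contextual_embedding("bank", "money bank"))  # [0.75, 0.25]
```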

Essentially, embeddings bridge the gap between human language and machine logic.

That’s why vector embeddings in AI are the foundation of many intelligent systems today.

Real-World Examples of Vector Embeddings

To make it more relatable, here are some real-world examples of how embeddings are used every day:

- Chatbots and Virtual Assistants

→ When you chat with ChatGPT or Alexa, embeddings help the AI understand your intent, even when you phrase it differently.

- Google Search and YouTube Recommendations

→ Google doesn’t just match keywords; it uses embeddings to understand the meaning of your search.

- Spotify and Netflix Recommendations

→ These platforms embed user behavior and content metadata to suggest what you’ll likely enjoy next.

- Fraud Detection

→ Financial institutions embed transaction patterns to spot unusual behavior and help prevent fraud.

- Healthcare Diagnostics

→ Medical data embeddings help AI models find patterns in symptoms or images that can support disease prediction.

How LangChain Uses Vector Embeddings

LangChain is one of the most popular frameworks for building AI applications powered by large language models (LLMs).

But what makes LangChain truly powerful is how it uses vector embeddings to create memory and retrieval mechanisms for AI.

Here’s how it works:

- Convert Text to Embeddings – LangChain passes text documents or chat histories to an embedding model, which converts them into vectors.

- Store in a Vector Database – These embeddings are stored in databases like Pinecone, Weaviate, or Qdrant.

- Retrieve Similar Contexts – When a user asks a question, LangChain finds related vectors from the database.

- Pass to the LLM – The retrieved context is passed to the AI model (like GPT) to generate a relevant, well-grounded answer.

This technique is called Retrieval-Augmented Generation (RAG) — a method that allows AI to “look up” relevant information before responding.
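The four steps can be sketched without any framework. This is not LangChain’s API: the `embed` function is a hypothetical bag-of-words stand-in for a real embedding model (so it matches shared words rather than meaning), a Python list stands in for the vector database, and the final prompt is printed instead of being sent to an LLM.

```python
import math
import re
from collections import Counter

def embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Steps 1-2: convert documents to vectors and store them (a plain list here).
documents = [
    "Pinecone is a managed vector database for similarity search.",
    "LangChain is a framework for building applications with large language models.",
    "Bananas are a good source of potassium.",
]
store = [(doc, embed(doc)) for doc in documents]

def retrieve(question, k=1):
    """Step 3: return the k stored documents most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(store, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question, k=1):
    """Step 4: put the retrieved context into the prompt an LLM would receive."""
    context = "\n".join(retrieve(question, k))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("What is LangChain?"))
```

Swapping `embed` for a real embedding model and the list for a vector database keeps the same shape while letting retrieval match paraphrases, not just shared words.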

So, LangChain uses vector embeddings as the memory backbone of smart AI systems.

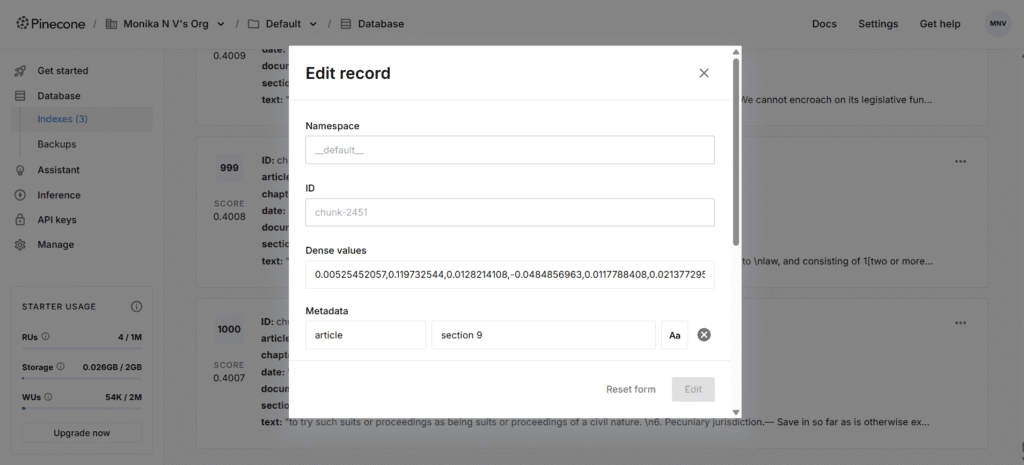

How Pinecone Uses Vector Embeddings

While LangChain manages the logic, Pinecone handles the storage and search part of embeddings.

Pinecone is a vector database designed to store, index, and query millions of embeddings efficiently.

Here’s how Pinecone fits into the pipeline:

- It stores embeddings generated by AI models.

- It allows you to perform vector similarity search — finding the closest vectors to a query.

- It scales automatically, handling massive datasets in real time.

For example, if you ask a chatbot built with LangChain and Pinecone:

“Who founded Apple?”

The system converts the question into a vector, searches for similar context vectors (like one for “Steve Jobs co-founded Apple”), and passes the best-matching context to the LLM, which uses it to generate the answer.
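A toy in-memory store illustrates the store-and-search part. The class below is hypothetical and is not the Pinecone client, although its `upsert` and `query` names echo the operations Pinecone exposes. It compares the query with every stored vector (brute force); production vector databases typically rely on approximate nearest-neighbor indexes to stay fast at scale. The 3-D vectors are hand-picked stand-ins for real embeddings of each text.

```python
import math

class InMemoryVectorStore:
    """Hypothetical toy vector store (not the Pinecone client).

    Search is brute force: the query is compared with every stored vector.
    """

    def __init__(self):
        self._records = {}  # record id -> (vector values, metadata dict)

    def upsert(self, record_id, values, metadata=None):
        """Insert a vector, or overwrite the one already stored under record_id."""
        self._records[record_id] = (values, metadata or {})

    def query(self, vector, top_k=1):
        """Return up to top_k (score, id, metadata) tuples, best match first."""
        scored = [
            (self._cosine(vector, values), record_id, metadata)
            for record_id, (values, metadata) in self._records.items()
        ]
        scored.sort(key=lambda match: match[0], reverse=True)
        return scored[:top_k]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        return dot / (math.hypot(*a) * math.hypot(*b))

# Hand-picked 3-D vectors standing in for real embeddings of each text.
store = InMemoryVectorStore()
store.upsert("apple-founders", [0.9, 0.1, 0.2], {"text": "Steve Jobs co-founded Apple."})
store.upsert("banana-facts", [0.1, 0.9, 0.3], {"text": "Bananas are rich in potassium."})

# Hypothetical embedding of the question "Who founded Apple?"
question_vector = [0.8, 0.2, 0.1]
for score, record_id, metadata in store.query(question_vector, top_k=1):
    print(record_id, round(score, 3), metadata["text"])
```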

That’s how modern AI applications can respond intelligently — thanks to vector embeddings and vector databases working together.

Applications of Vector Embeddings in Modern AI

Let’s explore where embeddings are used today:

| Application | Description |
|---|---|
| Semantic Search | Retrieve results based on meaning, not just exact keywords. |
| Chatbots & Conversational AI | Maintain memory and context during conversations. |
| Recommendation Engines | Suggest related products, videos, or articles. |
| Image & Audio Retrieval | Match similar images or sounds using embeddings. |
| Anomaly Detection | Identify outliers in complex datasets. |
| Document Clustering | Group related documents or articles automatically. |
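As one example from the table, anomaly detection can be sketched by flagging embeddings that sit far from the center of the data. The 2-D “transaction” vectors and the 0.5 threshold below are made up for illustration.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def find_anomalies(vectors, threshold):
    """Return indices of vectors whose Euclidean distance from the centroid exceeds threshold."""
    center = centroid(vectors)
    return [i for i, v in enumerate(vectors) if math.dist(v, center) > threshold]

# Hypothetical 2-D embeddings of transactions; the last one is unusual.
transactions = [
    [0.10, 0.20],
    [0.12, 0.18],
    [0.09, 0.22],
    [0.11, 0.19],
    [0.90, 0.95],
]
print(find_anomalies(transactions, threshold=0.5))  # [4]
```

One caveat: the outlier itself pulls the centroid toward it, so with real data a more robust center (such as the median) or a nearest-neighbor distance is often a better choice.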

The versatility of embeddings makes them one of the most powerful tools in machine learning today.

The Future of Vector Embeddings in AI

As AI models evolve, so will embeddings.

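We’re moving from static embeddings (like Word2Vec), where each word has a single fixed vector, to contextual embeddings (like BERT or OpenAI’s embedding models), where the representation depends on the surrounding text.

Multi-modal embeddings, which combine text, images, and sound into unified representations, already exist and are likely to become more common.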

These advanced embeddings will make AI systems:

- More human-like in understanding nuance.

- More efficient in memory and retrieval.

- More adaptable across languages and data types.

In short, vector embeddings are shaping the next generation of intelligent systems.

Key Takeaways

Let’s recap the main points about vector embeddings in AI:

- Vector embeddings represent data as numerical vectors that capture meaning and context.

- They allow AI to understand similarity, relationships, and semantics.

- Tools like LangChain use embeddings for AI memory and retrieval.

- Databases like Pinecone store and search embeddings efficiently.

- They power core features like semantic search, recommendations, and intelligent chatbots.

- Without vector embeddings, AI would just be pattern-matching, not understanding.

Conclusion

Vector embeddings in AI are the invisible backbone of many intelligent systems you use today, from search engines to chatbots.

They transform messy human data into structured, meaningful patterns that machines can reason about.

When combined with frameworks like LangChain and vector databases like Pinecone, embeddings unlock powerful applications — enabling AI to retrieve knowledge, maintain context, and generate accurate answers.

In short, if AI were a brain, vector embeddings would be its memory and understanding.

As we move forward into the age of intelligent agents and generative AI, mastering embeddings isn’t just optional — it’s essential.

Image Source: Pinecone Official Website
